http://download.csdn.net/download/liangmaoxuan/10228805

Package: kafka_2.10-0.10.2.0.tar

1. Extract the kafka_2.10-0.10.2.0 package

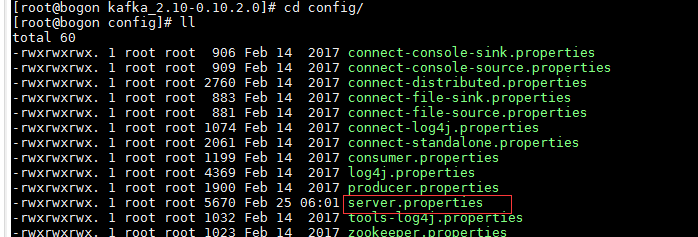

tar -xvf kafka_2.10-0.10.2.0.tar

2. Configure Kafka

cd /software/kafka_2.10-0.10.2.0/config

(1) server.properties

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

# Switch to enable topic deletion or not, default value is false

#delete.topic.enable=true

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from

# java.net.InetAddress.getCanonicalHostName() if not configured.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

listeners=PLAINTEXT://192.168.1.104:9092

# Hostname and port the broker will advertise to producers and consumers. If not set,

# it uses the value for "listeners" if configured. Otherwise, it will use the value

# returned from java.net.InetAddress.getCanonicalHostName().

advertised.listeners=PLAINTEXT://192.168.1.104:9092

# Legacy bind address; only consulted when listeners is not set.

host.name=192.168.1.104

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads handling network requests

num.network.threads=3

# The number of threads doing disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files

log.dirs=/tmp/kafka-logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync

# the OS cache lazily. The following configurations control the flush of data to disk.

# There are a few important trade-offs here:

# 1. Durability: Unflushed data may be lost if you are not using replication.

# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

# The settings below allow one to configure the flush policy to flush data after a period of time or

# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk

#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush

#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can

# be set to delete segments after a period of time, or after a given size has accumulated.

# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

# from the end of the log.

# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=168

# A size-based retention policy for logs. Segments are pruned from the log as long as the remaining

# segments don't drop below log.retention.bytes. Functions independently of log.retention.hours.

#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=192.168.1.104:2181

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000
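Only a handful of lines in this file differ from the stock version: broker.id, listeners, advertised.listeners, and zookeeper.connect. Before starting the broker it can help to sanity-check them. The sketch below is one way to do that with plain Java. The class name ServerConfigCheck and its rules are my own assumptions for illustration, not Kafka's validation logic, and it assumes a single listener entry rather than a comma-separated list.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper: basic sanity checks on the settings edited above.
public class ServerConfigCheck {

    /** Parses properties-file text (the same format as server.properties). */
    public static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    /** Extracts the host from "PROTOCOL://host:port"; returns null if malformed. */
    public static String hostOf(String listener) {
        int schemeEnd = listener.indexOf("://");
        int portStart = listener.lastIndexOf(':');
        if (schemeEnd < 0 || portStart <= schemeEnd) {
            return null;
        }
        return listener.substring(schemeEnd + 3, portStart);
    }

    /** Returns human-readable problems; an empty list means these checks passed. */
    public static List<String> check(Properties props) {
        List<String> problems = new ArrayList<>();

        // broker.id must be an integer
        String brokerId = props.getProperty("broker.id", "").trim();
        try {
            Integer.parseInt(brokerId);
        } catch (NumberFormatException e) {
            problems.add("broker.id is not an integer: '" + brokerId + "'");
        }

        // Clients connect to the advertised address, so it must not be a loopback or wildcard host
        String advertised = props.getProperty("advertised.listeners", "").trim();
        String host = hostOf(advertised);
        if (host == null) {
            problems.add("advertised.listeners is missing or malformed: '" + advertised + "'");
        } else if (host.isEmpty() || host.equals("localhost")
                || host.equals("127.0.0.1") || host.equals("0.0.0.0")) {
            problems.add("advertised.listeners host '" + host + "' is not usable from other machines");
        }

        if (props.getProperty("zookeeper.connect", "").trim().isEmpty()) {
            problems.add("zookeeper.connect is not set");
        }
        return problems;
    }

    public static void main(String[] args) {
        // Values copied from the configuration in this article
        Properties props = parse(String.join("\n",
                "broker.id=0",
                "listeners=PLAINTEXT://192.168.1.104:9092",
                "advertised.listeners=PLAINTEXT://192.168.1.104:9092",
                "zookeeper.connect=192.168.1.104:2181"));
        List<String> problems = check(props);
        System.out.println(problems.isEmpty() ? "basic checks passed" : problems.toString());
    }
}
```

To check a real file, the text passed to parse could be read from config/server.properties instead of the inline string.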

3. Start Kafka

Start ZooKeeper: nohup bin/zookeeper-server-start.sh config/zookeeper.properties 1>zookeeper.log 2>zookeeper.err &

Start Kafka: nohup bin/kafka-server-start.sh config/server.properties &

4. Single-node test:

(1) Producer

bin/kafka-console-producer.sh --broker-list 192.168.1.104:9092 --topic test

Type a message: lmx

(2) Consumer

bin/kafka-console-consumer.sh --zookeeper 192.168.1.104:2181 --topic test --from-beginning

Message received: lmx

5. Java code test:

(1) Configuration class: ConfigureAPI.class

package kafkaDemo;

public class ConfigureAPI
{
    public final static String GROUP_ID = "test";
    public final static String TOPIC = "test-lmx";
    public final static int BUFFER_SIZE = 64 * 1024;
    public final static int TIMEOUT = 20000;
    public final static int INTERVAL = 10000;
    public final static String BROKER_LIST = "192.168.1.104:9092,192.168.1.105:9092";
    // Interval between fetches, in milliseconds
    public final static int GET_MEG_INTERVAL = 1000;
}

(2) Producer class: JProducer.class

package kafkaDemo;

import java.util.Properties;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class JProducer implements Runnable
{
    private Producer<String, String> producer;

    public JProducer()
    {
        Properties props = new Properties();
        props.put("bootstrap.servers", ConfigureAPI.BROKER_LIST);
        // Wait for all in-sync replicas to acknowledge each record.
        // (The old-producer key "request.required.acks" is not a setting of this client, so it is dropped.)
        props.put("acks", "all");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producer = new KafkaProducer<String, String>(props);
    }

    @Override
    public void run()
    {
        try
        {
            String data = "hello lmx!";
            // send() is asynchronous; the record is buffered and sent in the background
            producer.send(new ProducerRecord<String, String>(ConfigureAPI.TOPIC, data));
            System.out.println(data);
        }
        catch (Exception e)
        {
            // Print the failure instead of silently discarding it
            e.printStackTrace();
        }
        finally
        {
            // close() waits for buffered records to be sent before returning
            producer.close();
        }
    }

    public static void main(String[] args)
    {
        ExecutorService threadPool = Executors.newCachedThreadPool();
        threadPool.execute(new JProducer());
        threadPool.shutdown();
    }
}

Execution result:

(3) Consumer class: JConsumer.class

package kafkaDemo;

import java.util.Arrays;
import java.util.Properties;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class JConsumer implements Runnable
{
    private KafkaConsumer<String, String> consumer;

    private JConsumer()
    {
        Properties props = new Properties();
        props.put("bootstrap.servers", ConfigureAPI.BROKER_LIST);
        props.put("group.id", ConfigureAPI.GROUP_ID);
        props.put("enable.auto.commit", true);
        props.put("auto.commit.interval.ms", 1000);
        props.put("session.timeout.ms", 30000);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        consumer = new KafkaConsumer<String, String>(props);
        consumer.subscribe(Arrays.asList(ConfigureAPI.TOPIC)); // separate multiple topics with commas
    }

    @Override
    public void run()
    {
        // Poll forever; each poll waits up to GET_MEG_INTERVAL ms for new records
        while (true)
        {
            System.out.println("poll Server message");
            ConsumerRecords<String, String> records = consumer.poll(ConfigureAPI.GET_MEG_INTERVAL);
            for (ConsumerRecord<String, String> record : records)
            {
                handleMeg(record.value());
            }
        }
    }

    private void handleMeg(String record)
    {
        System.out.println(record);
    }

    public static void main(String[] args)
    {
        ExecutorService threadPool = Executors.newCachedThreadPool();
        threadPool.execute(new JConsumer());
        threadPool.shutdown();
    }
}

Execution result:

Java demo code download: http://download.csdn.net/download/liangmaoxuan/10258460
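One detail in ConfigureAPI is worth a second look: BROKER_LIST is handed to both clients as bootstrap.servers, a comma-separated list of host:port pairs, and it also names 192.168.1.105:9092, a broker this walkthrough never sets up. A client only needs one reachable entry to bootstrap, but a malformed entry is easy to miss. The sketch below checks the format with the standard library only. BrokerListParser is a hypothetical helper of mine, not part of the Kafka client.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: validates a bootstrap.servers style string.
public class BrokerListParser {

    /**
     * Splits "host1:port1,host2:port2" into unresolved socket addresses.
     * Throws IllegalArgumentException if an entry is not host:port
     * or the port is not a number in the range 0-65535.
     */
    public static List<InetSocketAddress> parse(String brokerList) {
        List<InetSocketAddress> brokers = new ArrayList<>();
        for (String entry : brokerList.split(",")) {
            String trimmed = entry.trim();
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("expected host:port but got '" + trimmed + "'");
            }
            String host = trimmed.substring(0, colon);
            // NumberFormatException is a subclass of IllegalArgumentException
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            // createUnresolved skips the DNS lookup and rejects out-of-range ports
            brokers.add(InetSocketAddress.createUnresolved(host, port));
        }
        return brokers;
    }

    public static void main(String[] args) {
        // Same value as ConfigureAPI.BROKER_LIST in this article
        for (InetSocketAddress broker : parse("192.168.1.104:9092,192.168.1.105:9092")) {
            System.out.println(broker.getHostString() + " port " + broker.getPort());
        }
    }
}
```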

Fixes for some common errors:

(1) Error: Unable to connect to zookeeper server '192.168.1.104:2181' with timeout of 4000 ms

Fix:

1. Turn off the firewall

Run service iptables stop to stop the firewall

Run service iptables status to confirm it is stopped

Run chkconfig iptables off to keep it disabled after a reboot

2. Alternatively, open only port 2181

iptables -I INPUT -p tcp --dport 2181 -j ACCEPT

(2) Error: kafka Failed to send messages after 3 tries

Fix:

Edit server.properties so that the broker listens on, and advertises, an address that clients can actually reach:

listeners=PLAINTEXT://192.168.1.104:9092

advertised.listeners=PLAINTEXT://192.168.1.104:9092

host.name=192.168.1.104
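Both errors above usually come down to whether the client machine can open a TCP connection to the server. A quick way to tell a firewall problem from a configuration problem is to test the two ports directly. The sketch below does that with a plain socket. PortCheck is a hypothetical helper of mine, and the host and ports are simply the ones used in this article.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
public class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    public static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unresolvable host names
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults to the address from this article; pass another host as the first argument
        String host = args.length > 0 ? args[0] : "192.168.1.104";
        System.out.println("ZooKeeper 2181 reachable: " + isReachable(host, 2181, 2000));
        System.out.println("Kafka 9092 reachable: " + isReachable(host, 9092, 2000));
    }
}
```

If 2181 is unreachable, look at the firewall first. If 9092 is reachable but sends still fail, the advertised.listeners value is the more likely cause.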

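Please bear with any shortcomings in this write-up. It is only a personal summary and my technical knowledge is limited, so please point out any errors or omissions. My QQ: 373965070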
