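Environment:

Hive must be installed beforehand.

1. Dependencies. Take care to resolve conflicts; IDEA's Maven Helper plugin or the Diagrams view can be used to inspect them.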

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.13.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.cntaiping.tpi</groupId>

<artifactId>chezhufuwu</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>chezhufuwu</name>

<description>Car owner service reminders</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- Add the Hive dependency -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>2.1.1</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty.aggregate</groupId>

<artifactId>*</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<artifactId>commons-logging</artifactId>

<groupId>commons-logging</groupId>

</exclusion>

<exclusion>

<groupId>org.json</groupId>

<artifactId>json</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- <dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-hadoop</artifactId>

<version>2.5.0.RELEASE</version>

</dependency>-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.5</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Older versions such as v1.0.20 do not support Hive's conn.getHoldability() -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.21</version>

</dependency>

<!-- CRUD -->

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<!-- ConfigurationProperties-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>
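When the exclusions above are not enough and two copies of a logging or servlet library still end up on the classpath, it can help to check at runtime which jar a class is actually loaded from. The following is a minimal sketch using only the JDK; `JarLocator` and `locationOf` are hypothetical names introduced for illustration and are not part of the original project.

```java
import java.security.CodeSource;

// Hypothetical helper (not from the original post) for finding out
// which jar on the classpath supplies a given class.
final class JarLocator {

    // Returns the location a class was loaded from, or a marker string
    // for classes that have no code source (typically JDK classes).
    static String locationOf(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        if (source == null || source.getLocation() == null) {
            return "(JDK / bootstrap class loader)";
        }
        return source.getLocation().toString();
    }

    public static void main(String[] args) throws ClassNotFoundException {
        // Pass fully qualified class names as arguments,
        // for example org.slf4j.LoggerFactory.
        for (String name : args) {
            System.out.println(name + " -> " + locationOf(Class.forName(name)));
        }
        System.out.println("java.lang.String -> " + locationOf(String.class));
    }
}
```

Running it inside the application with a suspect class name shows the jar that wins on the classpath, which can then be compared against the dependency tree shown by the IDE.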

2. Hive configuration file

2.1 Put the settings in the yml file, or define a custom configuration file and import it.

hive:
  url: jdbc:hive2://10.28.xxx.xxx:10000/xxxxx
  driver-class-name: org.apache.hive.jdbc.HiveDriver
  type: com.alibaba.druid.pool.DruidDataSource
  user: xxxx
  password: xxxx
  # Additional connection-pool settings, applied to all data sources above
  # Initial, minimum idle, and maximum active pool size
  initialSize: 1
  minIdle: 3
  maxActive: 20
  # Maximum time to wait when acquiring a connection, in milliseconds
  maxWait: 60000
  # Interval between checks for idle connections that should be closed, in milliseconds
  timeBetweenEvictionRunsMillis: 60000
  # Minimum time a connection must stay idle in the pool before it can be evicted, in milliseconds
  minEvictableIdleTimeMillis: 30000
  validationQuery: select 1
  testWhileIdle: true
  testOnBorrow: false
  testOnReturn: false
  # Enable PSCache and set the PSCache size for each connection
  poolPreparedStatements: true
  maxPoolPreparedStatementPerConnectionSize: 20

Then write the configuration class:

package com.cntaiping.tpi.chezhufuwu.config;

import javax.sql.DataSource;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.jdbc.core.JdbcTemplate;

import com.alibaba.druid.pool.DruidDataSource;

// By default the properties are loaded from the yml or properties file; the setters and getters are required for binding

@Configuration

@ConfigurationProperties(prefix = "hive")

public class HiveDruidConfig {

private String url;

private String user;

private String password;

private String driverClassName;

private int initialSize;

private int minIdle;

private int maxActive;

private int maxWait;

private int timeBetweenEvictionRunsMillis;

private int minEvictableIdleTimeMillis;

private String validationQuery;

private boolean testWhileIdle;

private boolean testOnBorrow;

private boolean testOnReturn;

private boolean poolPreparedStatements;

private int maxPoolPreparedStatementPerConnectionSize;

public HiveDruidConfig() {

}

@Bean(name = "hiveDruidDataSource")

@Qualifier("hiveDruidDataSource")

public DataSource dataSource() {

DruidDataSource datasource = new DruidDataSource();

datasource.setUrl(url);

datasource.setUsername(user);

datasource.setPassword(password);

datasource.setDriverClassName(driverClassName);

// pool configuration

datasource.setInitialSize(initialSize);

datasource.setMinIdle(minIdle);

datasource.setMaxActive(maxActive);

datasource.setMaxWait(maxWait);

datasource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

datasource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

datasource.setValidationQuery(validationQuery);

datasource.setTestWhileIdle(testWhileIdle);

datasource.setTestOnBorrow(testOnBorrow);

datasource.setTestOnReturn(testOnReturn);

datasource.setPoolPreparedStatements(poolPreparedStatements);

datasource.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);

return datasource;

}

@Bean(name = "hiveDruidTemplate")

public JdbcTemplate hiveDruidTemplate(@Qualifier("hiveDruidDataSource") DataSource dataSource) {

return new JdbcTemplate(dataSource);

}

public String getUrl() {

return url;

}

public void setUrl(String url) {

this.url = url;

}

public String getUser() {

return user;

}

public void setUser(String user) {

this.user = user;

}

public String getPassword() {

return password;

}

public void setPassword(String password) {

this.password = password;

}

public String getDriverClassName() {

return driverClassName;

}

public void setDriverClassName(String driverClassName) {

this.driverClassName = driverClassName;

}

public int getInitialSize() {

return initialSize;

}

public void setInitialSize(int initialSize) {

this.initialSize = initialSize;

}

public int getMinIdle() {

return minIdle;

}

public void setMinIdle(int minIdle) {

this.minIdle = minIdle;

}

public int getMaxActive() {

return maxActive;

}

public void setMaxActive(int maxActive) {

this.maxActive = maxActive;

}

public int getMaxWait() {

return maxWait;

}

public void setMaxWait(int maxWait) {

this.maxWait = maxWait;

}

public int getTimeBetweenEvictionRunsMillis() {

return timeBetweenEvictionRunsMillis;

}

public void setTimeBetweenEvictionRunsMillis(int timeBetweenEvictionRunsMillis) {

this.timeBetweenEvictionRunsMillis = timeBetweenEvictionRunsMillis;

}

public int getMinEvictableIdleTimeMillis() {

return minEvictableIdleTimeMillis;

}

public void setMinEvictableIdleTimeMillis(int minEvictableIdleTimeMillis) {

this.minEvictableIdleTimeMillis = minEvictableIdleTimeMillis;

}

public String getValidationQuery() {

return validationQuery;

}

public void setValidationQuery(String validationQuery) {

this.validationQuery = validationQuery;

}

public boolean isTestWhileIdle() {

return testWhileIdle;

}

public void setTestWhileIdle(boolean testWhileIdle) {

this.testWhileIdle = testWhileIdle;

}

public boolean isTestOnBorrow() {

return testOnBorrow;

}

public void setTestOnBorrow(boolean testOnBorrow) {

this.testOnBorrow = testOnBorrow;

}

public boolean isTestOnReturn() {

return testOnReturn;

}

public void setTestOnReturn(boolean testOnReturn) {

this.testOnReturn = testOnReturn;

}

public boolean isPoolPreparedStatements() {

return poolPreparedStatements;

}

public void setPoolPreparedStatements(boolean poolPreparedStatements) {

this.poolPreparedStatements = poolPreparedStatements;

}

public int getMaxPoolPreparedStatementPerConnectionSize() {

return maxPoolPreparedStatementPerConnectionSize;

}

public void setMaxPoolPreparedStatementPerConnectionSize(int maxPoolPreparedStatementPerConnectionSize) {

this.maxPoolPreparedStatementPerConnectionSize = maxPoolPreparedStatementPerConnectionSize;

}

}
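Before relying on the pooled data source, it can be useful to confirm that the URL, user, and password work over plain JDBC. The sketch below does that with `java.sql.DriverManager` and the same `select 1` validation query as the yml above. `HiveSmokeTest`, `hiveUrl`, and `canQuery` are hypothetical names; the hive-jdbc jar is assumed to be on the classpath at runtime, and if the driver is not picked up automatically, calling `Class.forName("org.apache.hive.jdbc.HiveDriver")` first may be needed.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical connectivity check, independent of Spring and Druid.
final class HiveSmokeTest {

    // Builds a HiveServer2 JDBC URL in the same form as hive.url in the yml.
    static String hiveUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    // Opens one connection, runs the validation query, and reports success.
    static boolean canQuery(String url, String user, String password) {
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("select 1")) {
            return rs.next();
        } catch (SQLException e) {
            // Covers a missing driver, an unreachable server, and bad credentials.
            System.err.println("Hive connection failed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        if (args.length < 5) {
            System.err.println("usage: HiveSmokeTest <host> <port> <database> <user> <password>");
            return;
        }
        String url = hiveUrl(args[0], Integer.parseInt(args[1]), args[2]);
        System.out.println(url + " reachable: " + canQuery(url, args[3], args[4]));
    }
}
```

If this check fails, the problem lies in the driver, network, or credentials rather than in the Druid or Spring configuration.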

Then write a test method; here the JdbcTemplate is used to run the query.

public class ResultDto {

private String policyno;

private String agentid;

// ... (remaining members omitted)

}

import com.cntaiping.tpi.chezhufuwu.bean.ResultDto;

import com.cntaiping.tpi.chezhufuwu.service.ChezhufuwuService;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.jdbc.core.BeanPropertyRowMapper;

import org.springframework.jdbc.core.JdbcTemplate;

import org.springframework.stereotype.Service;

import java.util.List;

import java.util.Map;

/**

* Created by yusy02 on 2020/04/12 22:27

*/

@Service

public class ChezhufuwuServiceImpl implements ChezhufuwuService {

private static final Logger logger = LoggerFactory.getLogger(ChezhufuwuServiceImpl.class);

@Autowired

@Qualifier("hiveDruidTemplate")

private JdbcTemplate hiveDruidTemplate;

@Override

public String pushData() {

String sql="select policyno,agentid from gupolicycusclaims limit 10";

// Return each row as a Map

//List<Map<String, Object>> maps = hiveDruidTemplate.queryForList(sql);

// Return objects

//List<ResultDto> resultDtos = hiveDruidTemplate.query(sql, (rs,rownum)->{

// return new ResultDto(rs.getString("policyno"),rs.getString("agentid"));

//});

List<ResultDto> resultDtos = hiveDruidTemplate.query(sql, new BeanPropertyRowMapper<>(ResultDto.class));

resultDtos.forEach(System.out::print);

//System.out.println(maps);

return "ok";

}

}
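`BeanPropertyRowMapper` creates the target object through its no-argument constructor and fills it through setters whose property names match the column names, so the DTO needs both. The following is a simplified, JDK-only illustration of that idea, not Spring's actual implementation: it matches names case-insensitively only, while the real mapper also handles underscored column names and type conversion. `RowMappingSketch`, `PolicyRow`, and `mapRow` are hypothetical names, and `PolicyRow` only models the two columns selected in the query above.

```java
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified sketch of setter-based row mapping; not Spring's code.
final class RowMappingSketch {

    // Hypothetical DTO: no-arg constructor plus a getter/setter per column.
    public static class PolicyRow {
        private String policyno;
        private String agentid;

        public PolicyRow() { }

        public String getPolicyno() { return policyno; }
        public void setPolicyno(String policyno) { this.policyno = policyno; }
        public String getAgentid() { return agentid; }
        public void setAgentid(String agentid) { this.agentid = agentid; }

        @Override
        public String toString() {
            return "PolicyRow{policyno=" + policyno + ", agentid=" + agentid + "}";
        }
    }

    // Copies each column value into the bean property with the same name,
    // ignoring case. Columns without a matching setter are skipped.
    static <T> T mapRow(Map<String, Object> row, Class<T> type) {
        try {
            T bean = type.getDeclaredConstructor().newInstance();
            for (PropertyDescriptor pd
                    : Introspector.getBeanInfo(type, Object.class).getPropertyDescriptors()) {
                Method setter = pd.getWriteMethod();
                if (setter == null) {
                    continue;
                }
                for (Map.Entry<String, Object> column : row.entrySet()) {
                    if (column.getKey().equalsIgnoreCase(pd.getName())) {
                        setter.invoke(bean, column.getValue());
                    }
                }
            }
            return bean;
        } catch (ReflectiveOperationException | IntrospectionException e) {
            throw new IllegalStateException("Cannot map row to " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("policyno", "P001");
        row.put("agentid", "A42");
        System.out.println(mapRow(row, PolicyRow.class));
    }
}
```

If a field comes back as null in the real query, a missing setter or a column name that does not match the property name is a likely cause.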

// How to convert query results into objects: https://www.cnblogs.com/silyvin/p/9106797.html

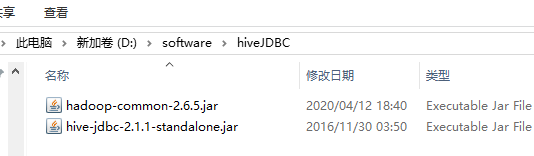

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.5</version>

</dependency>

Then place it in any location you like.

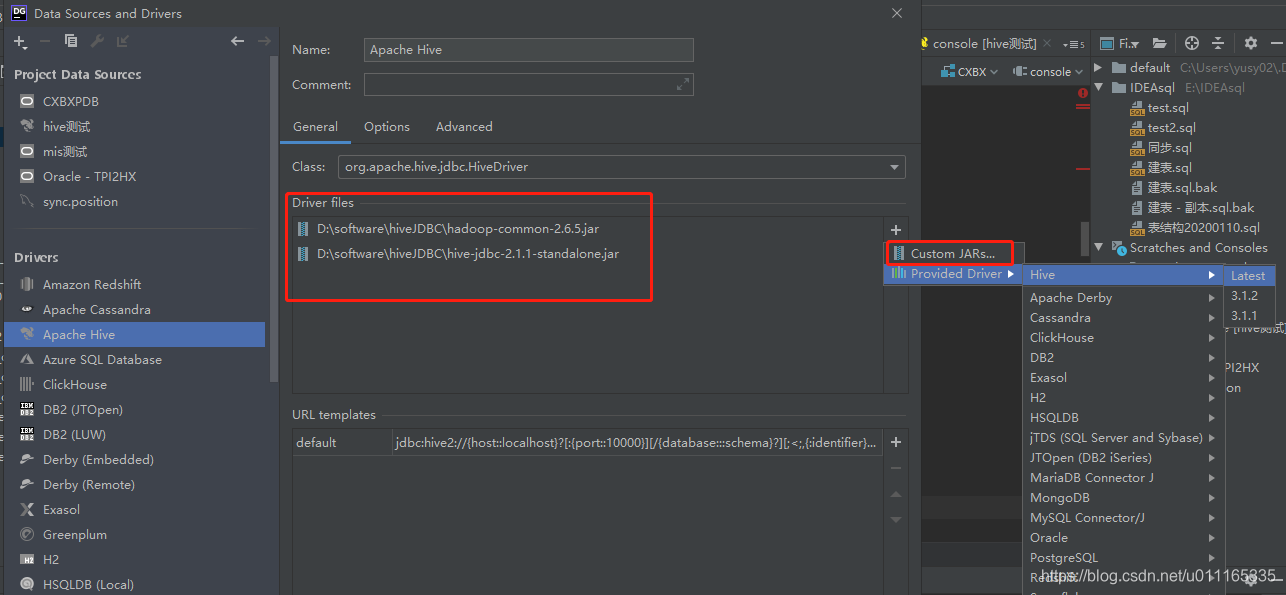

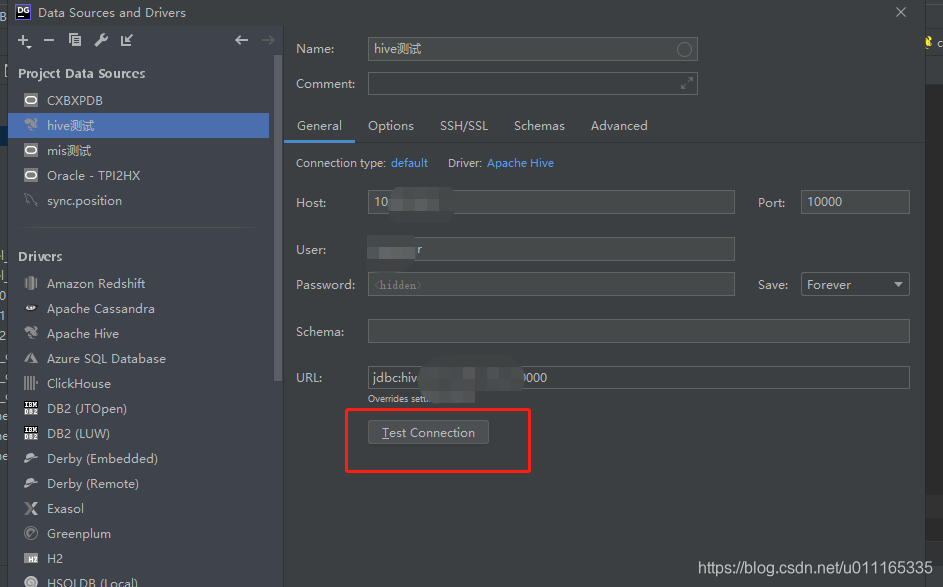

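Open DataGrip. By default DataGrip downloads the latest driver; here the latest version shown is 3.1.2, but we need 2.1.1, so the driver has to be added as a custom driver.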

References:

1. Configuration: https://blog.csdn.net/pengjunlee/article/details/81838480

2. Possible errors: https://blog.csdn.net/qq_34246546/article/details/81075452
