社区微信群开通啦,扫一扫抢先加入社区官方微信群

社区微信群

社区微信群开通啦,扫一扫抢先加入社区官方微信群

社区微信群

使用百度API获取数据的实践案例,涉及的技术包括:

通过百度地图web服务API获取中国所有城市的公园数据,并且获取每一个公园具体的评分、描述等详情,最终将数据存储到MySQL数据库中。

百度地图API的URL为:http://lbsyun.baidu.com/index.php?title=webapi/guide/webservice-placeapi

网站爬虫除了可以直接进入该网站的网页进行抓取外,还可以通过网站提供的API进行抓取。

登录百度账号,创建应用,并在IP白名单的文本框中填写0.0.0.0/0,表示不想对IP做任何限制。

本项目的实施分为三个步骤:

(1)获取所有拥有公园的城市,并存储到TXT

(2)获取所有城市的公园数据,并存储到MySQL

(3)获取所有公园的详细信息,并存储到MySQL

在百度地图API中,如果需要获取数据,向指定的URL地址发送一个GET请求即可。

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : GetBeijingPark.py

@Author: Xinzhe.Pang

@Date : 2019/7/18 23:49

@Desc :

"""

import requests

import json

def getjson(link, loc, page_num=0):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

pa = {'query': '公园',

'region': loc,

'scope': '2',

'page_size': 20,

'page_num': page_num,

'output': 'json',

'ak': '自己的AK'

}

r = requests.get(link, params=pa, headers=headers)

decodejson = json.loads(r.text)

return decodejson

link = "http://api.map.baidu.com/place/v2/search"

loc = '北京'

print(getjson(link, loc))

接下来,获取所有拥有公园的城市,并把结果写入MySQL中。

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : GetCityPark.py

@Author: Xinzhe.Pang

@Date : 2019/7/19 0:07

@Desc :

"""

import requests

import json

def getjson(link, loc, page_num=0):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

pa = {'query': '公园',

'region': loc,

'scope': '2',

'page_size': 20,

'page_num': page_num,

'output': 'json',

'ak': '自己的AK'

}

r = requests.get(link, params=pa, headers=headers)

decodejson = json.loads(r.text)

return decodejson

link = "http://api.map.baidu.com/place/v2/search"

province_list = ['江苏省', '浙江省', '广东省', '福建省', '山东省', '河南省', '河北省', '四川省', '辽宁省', '云南省',

'湖南省', '湖北省', '江西省', '安徽省', '山西省', '广西壮族自治区', '陕西省', '黑龙江省', '内蒙古自治区',

'贵州省', '吉林省', '甘肃省', '新疆维吾尔自治区', '海南省', '宁夏回族自治区', '青海省', '西藏自治区']

for eachprovince in province_list:

decodejson = getjson(link, eachprovince)

try:

for eachcity in decodejson['results']:

city = eachcity['name']

num = eachcity['num']

print(city, num)

output = 't'.join([city, str(num)]) + 'rn'

with open('cities.txt', "a+", encoding='utf-8') as f:

f.write(output)

f.close()

except Exception as e:

print(e)

获取所有城市的公园数据,首先在Mysql数据库中创建一个baidumap数据库,用来存放所有数据。

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : GetCityPark_MySQL.py

@Author: Xinzhe.Pang

@Date : 2019/7/19 20:07

@Desc :

"""

import MySQLdb

conn = MySQLdb.connect(host='***.***.***.***', user='****', passwd='***', db='****', charset='utf8')

cur = conn.cursor()

sql = """CREATE TABLE city(

id INT NOT NULL AUTO_INCREMENT,

city VARCHAR(200) NOT NULL,

park VARCHAR(200) NOT NULL,

location_lat FLOAT,

location_lng FLOAT,

address VARCHAR(200),

street_id VARCHAR(200),

uid VARCHAR(200),

created_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY(id)

);"""

cur.execute(sql)

conn.commit()

conn.close()

然后使用Python的mysqlclient库来操作MySQL数据库

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : GetCityPark_MysqlSave.py

@Author: Xinzhe.Pang

@Date : 2019/7/19 21:07

@Desc :

"""

import requests

import json

import MySQLdb

city_list = list()

with open('cities.txt', 'r', encoding='UTF-8') as txt_file:

for eachLine in txt_file:

if eachLine != "" and eachLine != "n":

fileds = eachLine.split("t")

city = fileds[0]

city_list.append(city)

txt_file.close()

conn = MySQLdb.connect(host='***.***.***.***', user='****', passwd='****', db='*****', charset='utf8')

cur = conn.cursor()

link = "http://api.map.baidu.com/place/v2/search"

def getjson(link, loc, page_num=0):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

pa = {'query': '公园',

'region': loc,

'scope': '2',

'page_size': 20,

'page_num': page_num,

'output': 'json',

'ak': 's**************************************SA'

}

r = requests.get(link, params=pa, headers=headers)

decodejson = json.loads(r.text)

return decodejson

for eachCity in city_list:

not_last_page = True

page_num = 0

while not_last_page:

decodejson = getjson(link, eachCity, page_num)

print(eachCity, page_num)

if decodejson['results']:

for eachone in decodejson['results']:

try:

park = eachone['name']

except:

park = None

try:

location_lat = eachone['location']['lat']

except:

location_lat = None

try:

location_lng = eachone['location']['lng']

except:

location_lng = None

try:

address = eachone['address']

except:

address = None

try:

street_id = eachone['street_id']

except:

street_id = None

try:

uid = eachone['uid']

except:

uid = None

sql = """INSERT INTO scraping.city(city,park,location_lat,location_lng,address,street_id,uid) VALUES(%s,%s,%s,%s,%s,%s,%s);"""

cur.execute(sql, (eachCity, park, location_lat, location_lng, address, street_id, uid,))

conn.commit()

page_num += 1

else:

not_last_page = False

cur.close()

conn.close()

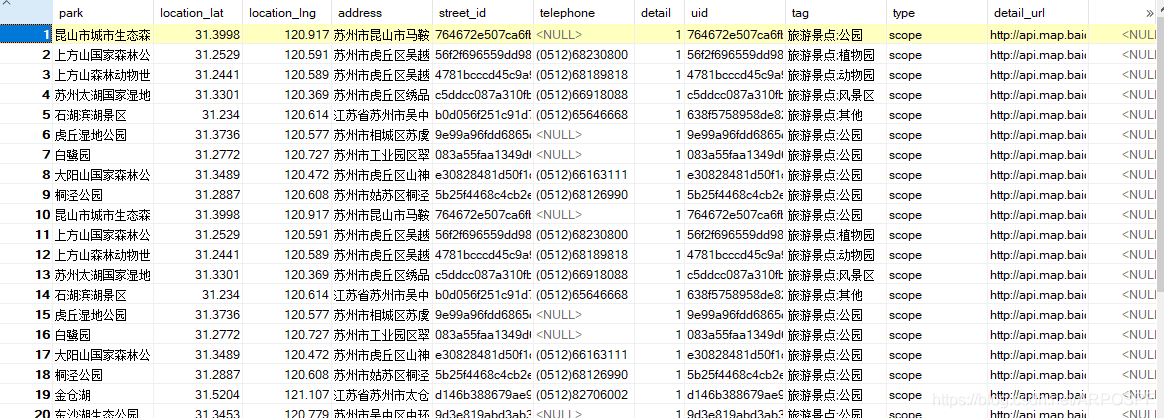

执行完成后,在MySQL数据库中查看结果:

最后,我们要获取所有公园的详细信息。首先要创建一个表格park,用来记录公园的详细信息。

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : CreateCityParkInfo.py

@Author: Xinzhe.Pang

@Date : 2019/7/19 21:30

@Desc :

"""

import MySQLdb

conn = MySQLdb.connect(host='***.***.***.***', user='***', passwd='*****', db='*****', charset='utf8')

cur = conn.cursor()

sql = """CREATE TABLE park (

id INT NOT NULL AUTO_INCREMENT,

park VARCHAR(200) NOT NULL,

location_lat FLOAT,

location_lng FLOAT,

address VARCHAR(200),

street_id VARCHAR(200),

telephone VARCHAR(200),

detail INT,

uid VARCHAR(200),

tag VARCHAR(200),

type VARCHAR(200),

detail_url VARCHAR(800),

price INT,

overall_rating FLOAT,

image_num INT,

comment_num INT,

shop_hours VARCHAR(800),

alias VARCHAR(800),

keyword VARCHAR(800),

scope_type VARCHAR(200),

scope_grade VARCHAR(200),

description VARCHAR(9000),

created_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (id)

);"""

cur.execute(sql)

conn.commit()

conn.close()

接下来,就可以获取公园的详细信息了。

#!/usr/bin/env python

# -*- coding: utf-8 -*-

"""

@File : SaveCityParkInfo.py

@Author: Xinzhe.Pang

@Date : 2019/7/19 21:52

@Desc :

"""

import requests

import json

import MySQLdb

conn = MySQLdb.connect(host='****.***.***.***', user='***', passwd='****', db='*****', charset='utf8')

cur = conn.cursor()

sql = "Select uid from scraping.city where id>0;"

cur.execute(sql)

conn.commit()

results = cur.fetchall()

link = "http://api.map.baidu.com/place/v2/detail"

def getjson(link, uid):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

pa = {

'uid': uid,

'scope': '2',

'output': 'json',

'ak': 'AK'

}

r = requests.get(link, params=pa, headers=headers)

decodejson = json.loads(r.text)

return decodejson

for row in results:

uid = row[0]

decodejson = getjson(link, uid)

print(uid)

info = decodejson['result']

try:

park = info['name']

except:

park = None

try:

location_lat = info['location']['lat']

except:

location_lat = None

try:

location_lng = info['location']['lng']

except:

location_lng = None

try:

address = info['address']

except:

address = None

try:

street_id = info['street_id']

except:

street_id = None

try:

telephone = info['telephone']

except:

telephone = None

try:

detail = info['detail']

except:

detail = None

try:

tag = info['detail_info']['tag']

except:

tag = None

try:

detail_url = info['detail_info']['detail_url']

except:

detail_url = None

try:

type = info['detail_info']['type']

except:

type = None

try:

overall_rating = info['detail_info']['overall_rating']

except:

overall_rating = None

try:

image_num = info['detail_info']['image_num']

except:

image_num = None

try:

comment_num = info['detail_info']['comment_num']

except:

comment_num = None

try:

key_words = ''

key_words_list = info['detail_info']['di_review_keyword']

for eachone in key_words_list:

key_words = key_words + eachone['keyword'] + '/'

except:

key_words = None

try:

shop_hours = info['detail_info']['shop_hours']

except:

shop_hours = None

try:

alias = info['detail_info']['alias']

except:

alias = None

try:

scope_type = info['detail_info']['scope_type']

except:

scope_type = None

try:

scope_grade = info['detail_info']['scope_grade']

except:

scope_grade = None

try:

description = info['detail_info']['description']

except:

description = None

sql = """INSERT INTO scraping.park

(park, location_lat, location_lng, address, street_id, uid, telephone, detail, tag, detail_url, type, overall_rating, image_num,

comment_num, keyword, shop_hours, alias, scope_type, scope_grade, description)

VALUES

(%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s);"""

cur.execute(sql, (park, location_lat, location_lng, address, street_id, uid, telephone, detail, tag, detail_url,

type, overall_rating, image_num, comment_num, key_words, shop_hours, alias, scope_type,

scope_grade, description,))

conn.commit()

cur.close()

conn.close()

使用Mysql-Front查看数据表中的数据:

在代码执行过程中,会出现异常情况,可以在之后的代码中加入异常判断。

参考资料:《Python网络爬虫从入门到实践》

如果觉得我的文章对您有用,请随意打赏。你的支持将鼓励我继续创作!